What Are Neural Networks?

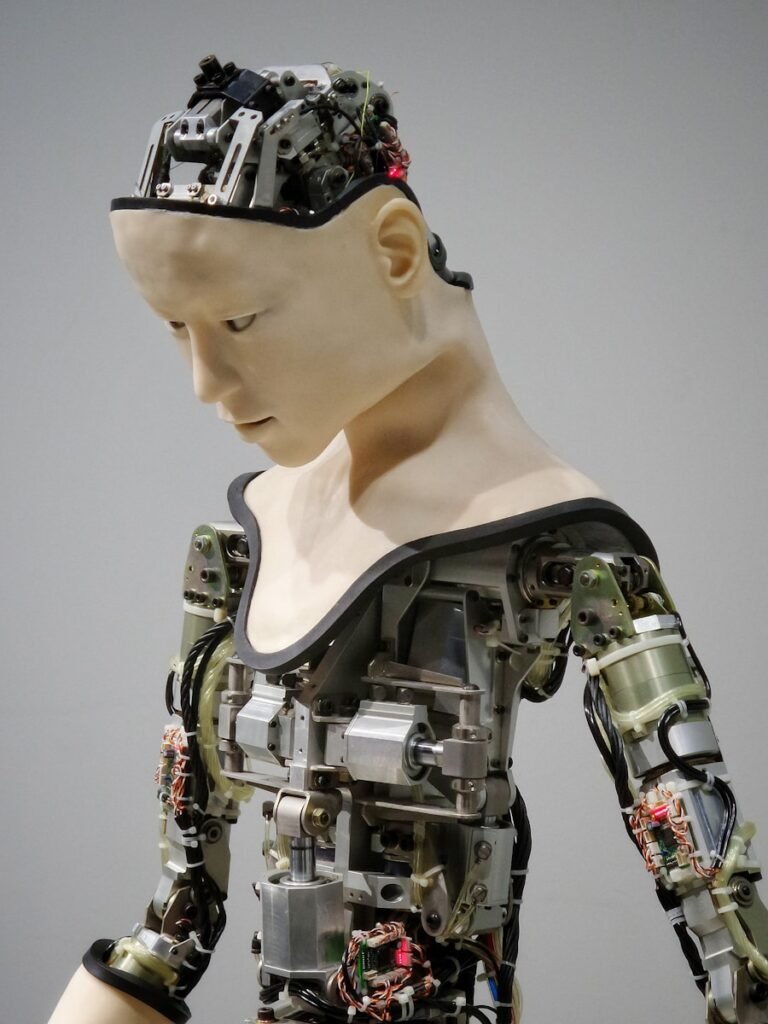

Picture your mind, with its vast array of neurons firing in all directions, grappling with intricate puzzles, forging connections, and absorbing knowledge from every experience. That is roughly what a neural network imitates! It’s like a miniature brain in a box, loosely inspired by biology rather than a faithful copy, and it runs at lightning speed (well, for the most part). These networks are designed to mimic, in simplified form, the way our brains process information, which makes them invaluable for tasks such as recognizing images, processing natural language, and even mastering games like chess or Go. As one writer put it, “Artificial intelligence is maturing rapidly alongside robots whose facial expressions can evoke empathy and set your mirror neurons abuzz.”

At the core of a neural network are layers of interconnected nodes, or neurons, that work together to sift through and dissect data. Each neuron takes in inputs, multiplies each one by a weight, adds a bias, applies an activation function to the result, and passes its output on to the next layer of neurons. It’s reminiscent of playing telephone; however, instead of spreading gossip, the network is crunching numbers and making informed predictions. Just like in real life, though, some voices carry more influence than others: connections with larger weights are the ones that truly steer the decision-making process. So next time you hear talk about neural networks, just bear in mind: it’s all about those connections!
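To make the weighted-sum-plus-activation idea concrete, here is a minimal sketch of one neuron and one fully connected layer in plain Python. The sigmoid activation, the function names `neuron` and `dense_layer`, and the weight values are illustrative assumptions, not anything prescribed above; real libraries do the same arithmetic with vectorized matrix multiplication.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


def dense_layer(inputs: list[float], weight_rows: list[list[float]], biases: list[float]) -> list[float]:
    """A layer is several neurons reading the same inputs; each row of weights belongs to one neuron."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Two inputs feeding a layer of three neurons (hypothetical weights chosen for illustration).
x = [1.0, 2.0]
hidden = dense_layer(x, [[0.5, -0.25], [1.0, 1.0], [-2.0, 0.0]], [0.0, -3.0, 1.0])
print(hidden)
```

A larger weight on a connection amplifies that input’s effect on the neuron’s output, which is the concrete sense in which some connections “steer” the decision.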

How Do Neural Networks Learn?

Neural networks are enigmatic sorcerers in the realm of AI, wielding their mystical abilities to grasp and conquer new obstacles. Imagine them as miniature digital minds, consuming data like a shot of espresso, fine-tuning their neural connections with each fresh piece of knowledge. It’s akin to that epiphany when you finally comprehend why your beloved TV character made that questionable choice – synapses spark, connections form, and suddenly everything falls into place.

As a neural network trains, it adjusts its weights and biases, refining its predictions with every cycle. It’s reminiscent of training a playful puppy: whenever a prediction misses, the weights are nudged in the direction that reduces the error. With time, these networks become efficient prediction engines, ready to tackle intricate tasks like seasoned veterans. Remember the wise words of Alan Turing: “We can only see a short distance ahead, but we can see plenty there that needs to be done.” In the domain of neural networks, learning is an ongoing voyage of exploration and adaptation, paving the way for inventive solutions to real-world dilemmas.
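The “correct the errors” loop can be sketched with the classic perceptron learning rule, a much simpler ancestor of the gradient-based training used in modern networks (a deliberate stand-in here, not how deep networks are actually trained). It learns the logical AND function by changing its weights and bias only when a prediction is wrong. The names `train_perceptron` and `predict` and the learning rate of 1.0 are illustrative assumptions.

```python
def step(z: float) -> int:
    """Threshold activation: fire (1) only if the weighted sum is positive."""
    return 1 if z > 0 else 0


def predict(weights: list[float], bias: float, x: tuple[int, int]) -> int:
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(data, lr: float = 1.0, max_epochs: int = 100):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in data:
            error = target - predict(weights, bias, x)  # 0 if correct, +1 or -1 if wrong
            if error != 0:
                mistakes += 1
                # Shift each weight (and the bias) in the direction that would have reduced this error.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # a full pass with no errors: training has converged
            break
    return weights, bias


and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_data)
print(weights, bias, [predict(weights, bias, x) for x, _ in and_data])
```

Because AND is linearly separable, the perceptron convergence theorem guarantees this loop stops with every example classified correctly.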

The Role of Activation Functions

Activation functions are the secret ingredients that give neural networks their unique flavor: they decide how strongly each neuron’s signal is passed on to the next layer. Crucially, they are nonlinear. Without them, any stack of layers would collapse into a single linear transformation, no more expressive than one layer, and the network would be as bewildered as a feline adorned with headwear.

One of the most widely used activation functions is ReLU, short for rectified linear unit. As Geoff Hinton, the revered computer scientist and AI pioneer, put it, “ReLU is like the key to unlocking truly deep networks.” Its simplicity belies its potency: it passes positive values through unchanged and sets negative values to zero. Because its gradient is exactly 1 for any positive input, it speeds up training and mitigates the dreaded vanishing gradient problem. In the realm of neural networks, any tool that helps us sidestep obstacles is a triumph in my eyes.
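Here is ReLU in a couple of lines, plus a quick numeric check of why nonlinearity matters: two stacked linear layers with no activation between them compute exactly the same function as a single linear layer, while inserting ReLU breaks that collapse. The scalar weights are arbitrary illustrative values, and the function names are hypothetical.

```python
def relu(z: float) -> float:
    """Rectified linear unit: pass positive values through, clamp negatives to zero."""
    return max(0.0, z)


def relu_grad(z: float) -> float:
    """Derivative of ReLU (taken as 0 at z == 0 by convention)."""
    return 1.0 if z > 0 else 0.0


w1, b1, w2, b2 = 3.0, 1.0, -2.0, 0.5  # illustrative weights and biases


def two_linear(x: float) -> float:
    """Two linear 'layers' with no activation in between."""
    return w2 * (w1 * x + b1) + b2


def one_linear(x: float) -> float:
    """The same function written as one linear layer: weight w2*w1, bias w2*b1 + b2."""
    return (w2 * w1) * x + (w2 * b1 + b2)


def with_relu(x: float) -> float:
    """ReLU between the layers makes the result piecewise linear instead."""
    return w2 * relu(w1 * x + b1) + b2


for x in [-2.0, -0.5, 0.0, 1.5]:
    print(x, two_linear(x), one_linear(x), with_relu(x))
```

The first two columns always match; the ReLU version diverges from them whenever the hidden value `w1 * x + b1` is negative.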

Understanding Gradient Descent

Here we find ourselves delving into the mysterious realm of Gradient Descent. Let’s dissect it without drowning in technical jargon.

Picture yourself on a mission to find the lowest point in a vast, rolling landscape of hills. You can’t see the whole terrain at once, so you take small steps in whichever direction slopes downward most steeply, gradually approaching your goal. The hills stand for the network’s error (its loss) as a function of its parameters, and each step is one gradient descent update: the parameters move a small distance, set by the learning rate, in the direction opposite to the gradient. As the adage goes, “It’s about the journey, not just reaching the destination,” and when training a neural network, success hinges on finding the parameters that minimize prediction errors.
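The hill-walking picture boils down to one update rule, `w_new = w - learning_rate * dL/dw`. The sketch below applies it to a toy one-parameter loss `L(w) = (w - 3)^2`, an illustrative stand-in for a real network’s loss, whose lowest point sits at `w = 3`. The starting point, learning rate, and step count are assumptions chosen for the example.

```python
def loss(w: float) -> float:
    """Toy loss surface with its minimum at w = 3."""
    return (w - 3.0) ** 2


def loss_grad(w: float) -> float:
    """Derivative dL/dw = 2 * (w - 3): the local slope of the hill."""
    return 2.0 * (w - 3.0)


def gradient_descent(w: float, learning_rate: float = 0.1, steps: int = 100) -> float:
    for _ in range(steps):
        # Step downhill: move opposite to the slope, scaled by the learning rate.
        w -= learning_rate * loss_grad(w)
    return w


w_final = gradient_descent(w=0.0)
print(w_final, loss(w_final))
```

With a learning rate of 0.1, each step shrinks the distance to the minimum by a factor of 0.8. For this particular loss, a learning rate above 1.0 would overshoot further on every step and diverge, which is why the step size matters as much as the direction.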

The Importance of Backpropagation

Backpropagation, despite its enigmatic name, is the incantation that lets neural networks learn from their blunders. Picture your mind whispering, “Remember when you nearly stumbled over Fluffy? Let’s steer clear of that mishap.” That is essentially what backpropagation does for a network: starting from the error at the output, it applies the chain rule layer by layer, working backward, to compute how much each weight contributed to the mistake. Gradient descent then uses those gradients to adjust the weights. As the esteemed computer scientist Grace Hopper once put it, “The most dangerous phrase in the language is, ‘We’ve always done it this way.’” Backpropagation gives a network the ability to break free from that cycle of repeating the same errors.

In the realm of neural networks, backpropagation plays the role of an unrivaled mentor, dispensing precise feedback and guiding the network toward mastery. It resembles having a personal tutor who pinpoints your weaknesses and helps you shore them up. In keeping with the age-old adage, “It does not matter how slowly you go as long as you do not stop,” each round of backpropagation and weight updates moves the network forward, one small improvement at a time.
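A small sketch can show what backpropagation actually computes. The hypothetical network below has two inputs, two sigmoid hidden units, one linear output, and a squared-error loss; its nine parameters are stored in one flat list. `backprop` applies the chain rule from the output back to every parameter, and `numerical_grad` checks each result against a finite-difference estimate, a standard sanity test often called gradient checking. All parameter and input values are arbitrary.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def unpack(p):
    """Flat list -> (hidden weights W1 [2x2], hidden biases b1, output weights w2, output bias b2)."""
    return [[p[0], p[1]], [p[2], p[3]]], [p[4], p[5]], [p[6], p[7]], p[8]


def forward(p, x):
    W1, b1, w2, b2 = unpack(p)
    h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(2)]
    y_hat = w2[0] * h[0] + w2[1] * h[1] + b2
    return h, y_hat


def loss(p, x, y):
    _, y_hat = forward(p, x)
    return 0.5 * (y_hat - y) ** 2


def backprop(p, x, y):
    """Gradient of the loss w.r.t. every parameter, in the same order as p."""
    _, _, w2, _ = unpack(p)
    h, y_hat = forward(p, x)
    d_out = y_hat - y                                  # dL/dy_hat
    d_w2 = [d_out * h[j] for j in range(2)]            # output weights
    d_b2 = d_out                                       # output bias
    # Error flowing back into each hidden unit, times the sigmoid derivative h * (1 - h).
    d_z1 = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(2)]
    d_W1 = [d_z1[j] * x[i] for j in range(2) for i in range(2)]
    return d_W1 + d_z1 + d_w2 + [d_b2]                 # d_z1 doubles as the hidden-bias gradient


def numerical_grad(p, x, y, eps=1e-5):
    """Central-difference estimate of each partial derivative."""
    grads = []
    for k in range(len(p)):
        up, down = list(p), list(p)
        up[k] += eps
        down[k] -= eps
        grads.append((loss(up, x, y) - loss(down, x, y)) / (2 * eps))
    return grads


params = [0.1, -0.2, 0.4, 0.3, 0.0, 0.1, 0.5, -0.6, 0.05]
x, y = [1.0, 2.0], 1.0
analytic = backprop(params, x, y)
numeric = numerical_grad(params, x, y)
print(max(abs(a - n) for a, n in zip(analytic, numeric)))
```

The two gradient lists should agree to many decimal places. A gradient descent step would then subtract a learning rate times `backprop(...)` from `params`.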

Different Types of Neural Networks

Neural networks are like a box of chocolates: you never quite know what tantalizing treat awaits you! It may sound like an oversimplification, but there really are many types of neural networks, each with its own allure and distinctive purpose. From the versatile multilayer perceptron to the captivating recurrent neural network, which processes sequences step by step, the realm of AI is a veritable feast of possibilities.

Much like browsing a sprawling film library on a Friday evening, choosing the right neural network for your task can be overwhelming. Should you opt for a tried-and-true feedforward network for a straightforward regression task, or venture into newer territory with Generative Adversarial Networks for cutting-edge image generation? The choices can seem endless, echoing Alan Turing’s remark, “We can only see a short distance ahead, but we can see plenty there that needs to be done.” Let us now embark on a tour of this perplexing world and uncover the wonders it holds!

Challenges in Training Neural Networks

Training a neural network can feel like stumbling through a maze blindfolded, never sure when you’ll hit a dead end or get tangled in the quirks of your data. The terrain is full of challenges, and none is more menacing than overfitting: the model learns its training data, noise included, so closely that it fails to generalize to new examples. To paraphrase Frank Herbert’s Dune, “Overfitting is the mind-killer. Overfitting is the little-death that brings total obliteration.” An overfit model is like a parrot serenading an audience with opera: impressive, yet it has only memorized the tune and cannot sing anything new.
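Overfitting is easy to see in miniature, and it is not unique to neural networks. The toy comparison below uses made-up data that follow `y = 2x` plus a fixed +1/-1 “noise” pattern. A “model” that simply memorizes the training points (nearest-neighbor lookup) scores a perfect zero training error, yet does worse on unseen inputs than a straight-line fit that averages the noise away. Both models are illustrative stand-ins chosen for brevity, not neural networks, and all names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares straight line: a simple model that cannot chase every wiggle."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_memorizer(xs, ys):
    """Memorizes the training set and answers with the nearest stored point's label."""
    table = list(zip(xs, ys))
    return lambda x: min(table, key=lambda pair: abs(pair[0] - x))[1]


def mse(model, xs, ys):
    """Mean squared error of a model's predictions."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Training data: the true rule is y = 2x, plus a fixed +1/-1 "noise" pattern.
train_x = [float(x) for x in range(10)]
train_y = [2 * x + (1 if i % 2 == 0 else -1) for i, x in enumerate(train_x)]
# Unseen inputs between the training points, with noise-free targets.
test_x = [x + 0.4 for x in train_x]
test_y = [2 * x for x in test_x]

line = fit_line(train_x, train_y)
memorizer = fit_memorizer(train_x, train_y)
for name, model in [("line", line), ("memorizer", memorizer)]:
    print(name, "train MSE:", mse(model, train_x, train_y), "test MSE:", mse(model, test_x, test_y))
```

The memorizer’s perfect training score is exactly the trap: it has learned the noise, so its errors on new inputs are several times larger than the simpler model’s.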

Then there is the vexing vanishing gradient problem, which plagues many deep networks. During backpropagation, the error signal is multiplied by each layer’s local derivative on its way back through the network; when those factors are small (the sigmoid’s derivative, for example, never exceeds 0.25), the gradient shrinks exponentially with depth. The early layers receive almost no learning signal, and training becomes an excruciatingly sluggish ordeal, like coaxing dance moves out of a sloth: progress may come eventually, but at an agonizingly slow pace. In a line attributed to Geoffrey Hinton, “The gradient’s vanishing act is the ultimate disappearing act in the circus of deep learning.” Watching gradients dissipate into nothingness feels like a magic trick gone awry, leaving your model bewildered and scratching its virtual head.
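The shrinking effect can be measured directly. This sketch passes a signal through a chain of hypothetical one-neuron layers, `a_k = f(w * a_(k-1))`, and multiplies the local derivatives together exactly as backpropagation would, once with sigmoid and once with ReLU. The input value, the weight of 1.0, and the function name `chain_gradient` are illustrative assumptions.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def chain_gradient(depth: int, x: float = 1.0, w: float = 1.0, use_relu: bool = False) -> float:
    """d(output)/d(input) through `depth` one-neuron layers, via the chain rule."""
    a, grad = x, 1.0
    for _ in range(depth):
        z = w * a
        if use_relu:
            local = 1.0 if z > 0 else 0.0  # ReLU derivative
            a = max(0.0, z)
        else:
            s = sigmoid(z)
            local = s * (1.0 - s)          # sigmoid derivative, never above 0.25
            a = s
        grad *= w * local                  # multiply in this layer's local derivative
    return grad


for depth in (1, 5, 10, 20):
    print(depth, chain_gradient(depth), chain_gradient(depth, use_relu=True))
```

With sigmoid, the gradient shrinks by at least a factor of four per layer, while the ReLU chain passes it through unchanged, in line with the earlier point about ReLU easing the problem. ReLU has its own failure mode, though: a unit whose input stays negative passes no gradient at all.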

Exploring Convolutional Neural Networks

Convolutional Neural Networks, known as CNNs, are the visual specialists of the AI realm, swooping in with unparalleled prowess to interpret and scrutinize image data. Picture them as the Avengers of computer vision, adept at recognizing objects, unraveling patterns, and decoding the visual cacophony that surrounds us. Just as Iron Man’s suit empowers him in combat, CNNs use layers of small learned filters that slide across an image to extract features such as edges and textures, with deeper layers combining them into more complex shapes. This lets them grasp subtle details that can elude human eyes.
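The “sliding filter” idea fits in a few lines. Below is a minimal sketch of a single-channel 2D convolution with no padding and a stride of 1 (like most deep learning libraries, it actually computes cross-correlation, meaning the kernel is not flipped). The tiny image and the hand-made vertical-edge kernel are illustrative assumptions; in a real CNN the kernel values are learned during training.

```python
def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """'Valid' 2D convolution: slide the kernel over every position where it fits entirely."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            # Dot product between the kernel and the image patch under it.
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A tiny 3x4 "image": dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-made vertical-edge detector: responds where brightness increases from left to right.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, edge_kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The feature map lights up only in the column where dark meets bright, which is exactly the kind of low-level feature a CNN’s first layers tend to detect.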

Whether it’s recognizing faces in social media snapshots or helping self-driving vehicles navigate city streets, CNNs work behind the scenes, processing information before you can even mutter “artificial intelligence.” Elon Musk has warned that AI need not be malevolent to harm humanity: if an AI has a goal and humanity happens to be in its way, it could destroy humanity without a second thought. Yet fret not, for CNNs stand ready to enrich our lives by bridging gaps where we mere mortals falter.